Home Assistant

OPEN SOURCE

WEB

PROJECT PITCH

Design and evolve Home Assistant’s core experience so that a powerful, privacy‑first smart home platform feels approachable to both new and advanced users.

COMPANY

Open Home Foundation

ROLE & TEAM COMPOSITION

Senior Product Designer

3x PM, 3x Product Designers, 4x Front-end

PERIOD

November 2024 - Present

TOOLS

Lyssna

Google Forms

Code Editors *

Figma Make *

Figma

Protopie

STUDY INDEX

RESPONSIBILITIES

DELIVERABLES

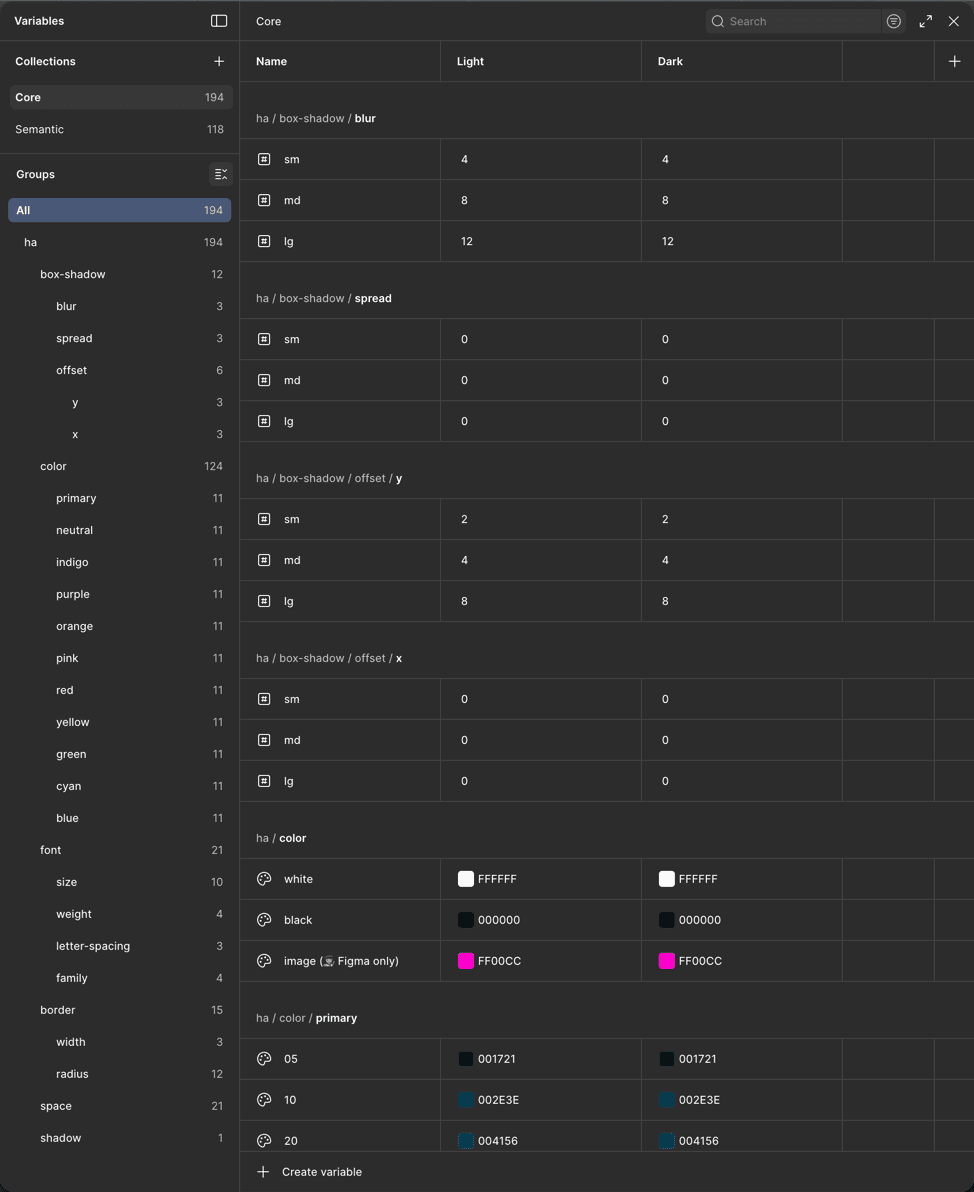

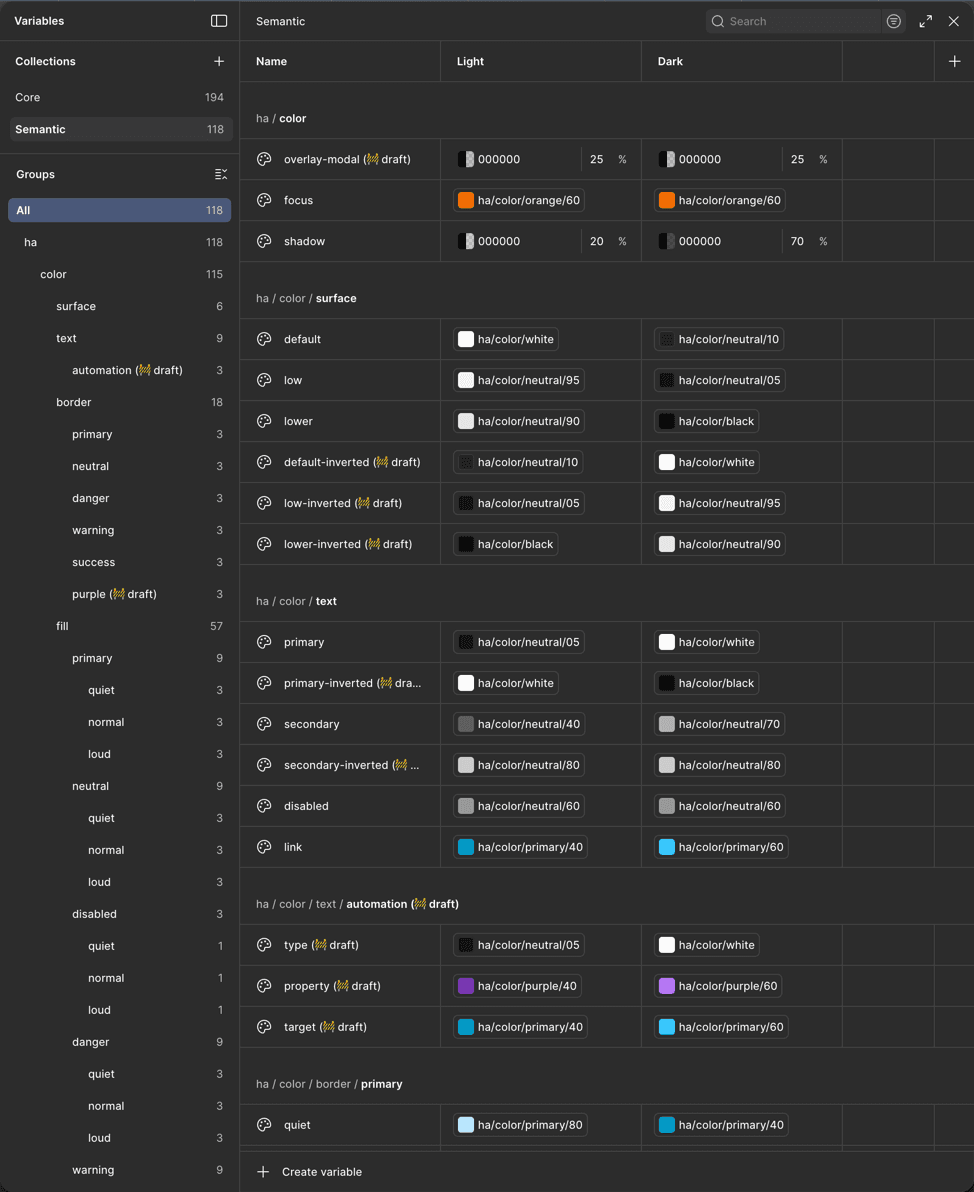

A new token‑based UI kit (base + semantic) aligned with Web Awesome and MD2 evolution

A new Figma UI kit with a coordinated migration from the legacy one, built to take advantage of the newest Figma features for developer hand-off

Research artifacts (surveys, synthesized findings) that informed IA and automation improvements

Production-ready Figma designs linked directly to GitHub PRs

Small PRs addressing UI-related issues

HOW I USED AI ✨

Rapid prototyping: Generated interactive code prototypes with tools like Google Antigravity and Cursor to validate new IA and automation concepts within hours instead of days

Design exploration: Used generative tools to produce draft layouts and flows from structured specs, then refined them to match Home Assistant’s design language

Data analysis: Synthesized large volumes of user feedback to identify patterns and inform feature improvements

Technical fluency: Analyzed project repositories to understand UI logic and constraints, ensuring better integration between design and code

STATUS

ONGOING

HOME ASSISTANT DEMO

Empathize

To understand how people really use Home Assistant, the focus here was on listening to different kinds of users. Their stories, questions, and frustrations helped reveal where the experience broke down and what problems were most important to solve first.

Define

I continued to identify and ship quick wins, like dashboard tweaks and general usability fixes, but the Empathize phase revealed deeper structural issues. While the wider team tackled other roadmap goals, I joined forces with a few colleagues to narrow our focus to two critical areas and define their problem statements:

Home Assistant's information architecture 🗃️

PROJECT PROBLEM STATEMENT

Surface and clean up the metadata of users' homes, overhauling when and how data is displayed.

Our goal was to ensure the interface provides the full context needed to make decisions, making the system speak "human," not just "machine."

To get user-validated insights, I conducted a user survey published on Reddit. Several questions provided valuable insight into how to fix this problem, but one gave the clearest picture of its scale and nature:

"If you rename devices in Home Assistant, what are your reasons for doing so?"

Most users rename their devices regularly, with the largest group (33%) doing so 'Sometimes' and a combined 60% stating they do so either 'Sometimes' or 'Often' (view source).

IMPACT

After we rolled out contextual info in over 7 key areas, users reported a better understanding of devices and how they relate to other parts of their homes. This cut confusion, freed the team to evolve and work on the dashboarding experience, and continues to improve the daily experience of using Home Assistant.

Automation engine 🤖

PROJECT PROBLEM STATEMENT

Align the automation engine’s learning curve with the evolving needs of our users.

In-depth analysis of one-on-one interviews with users of varying knowledge and backgrounds gave us a list of opportunities for addressing the problem statement. One of them was to transform the way users perceive and use Triggers and Conditions.

IMPACT

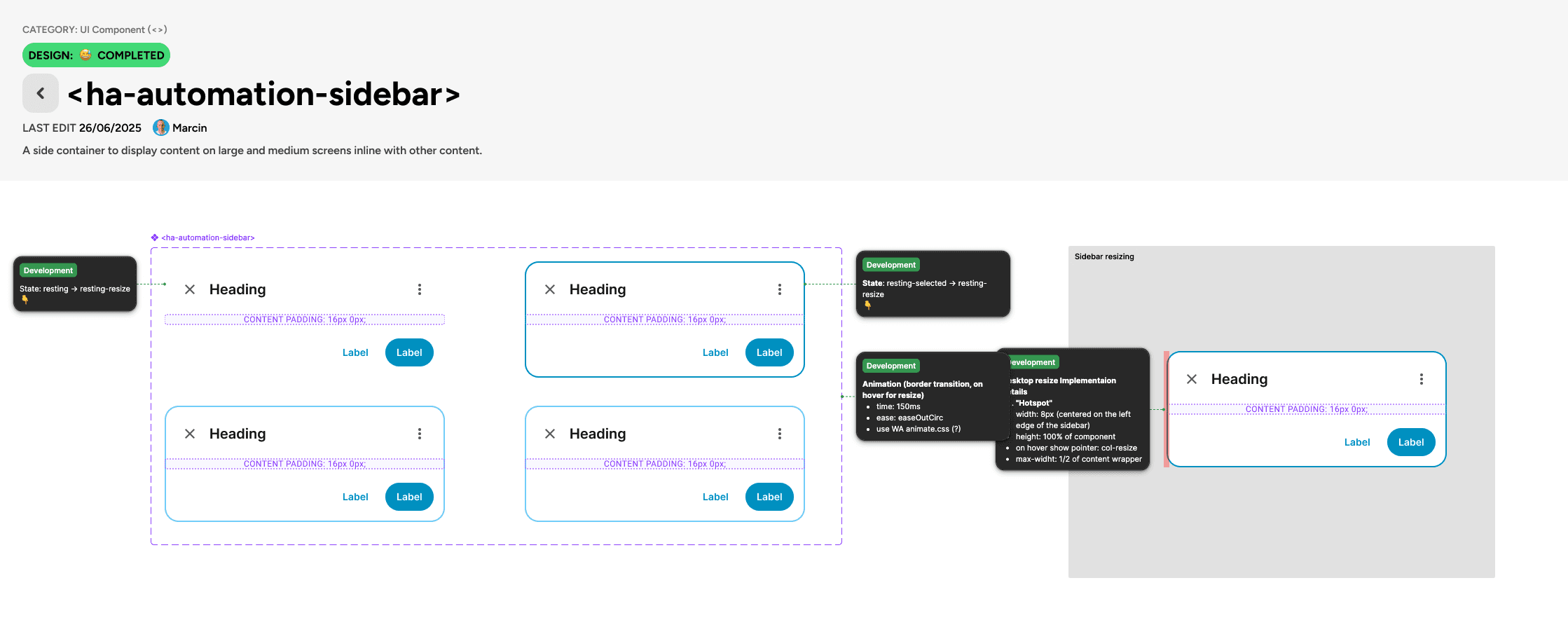

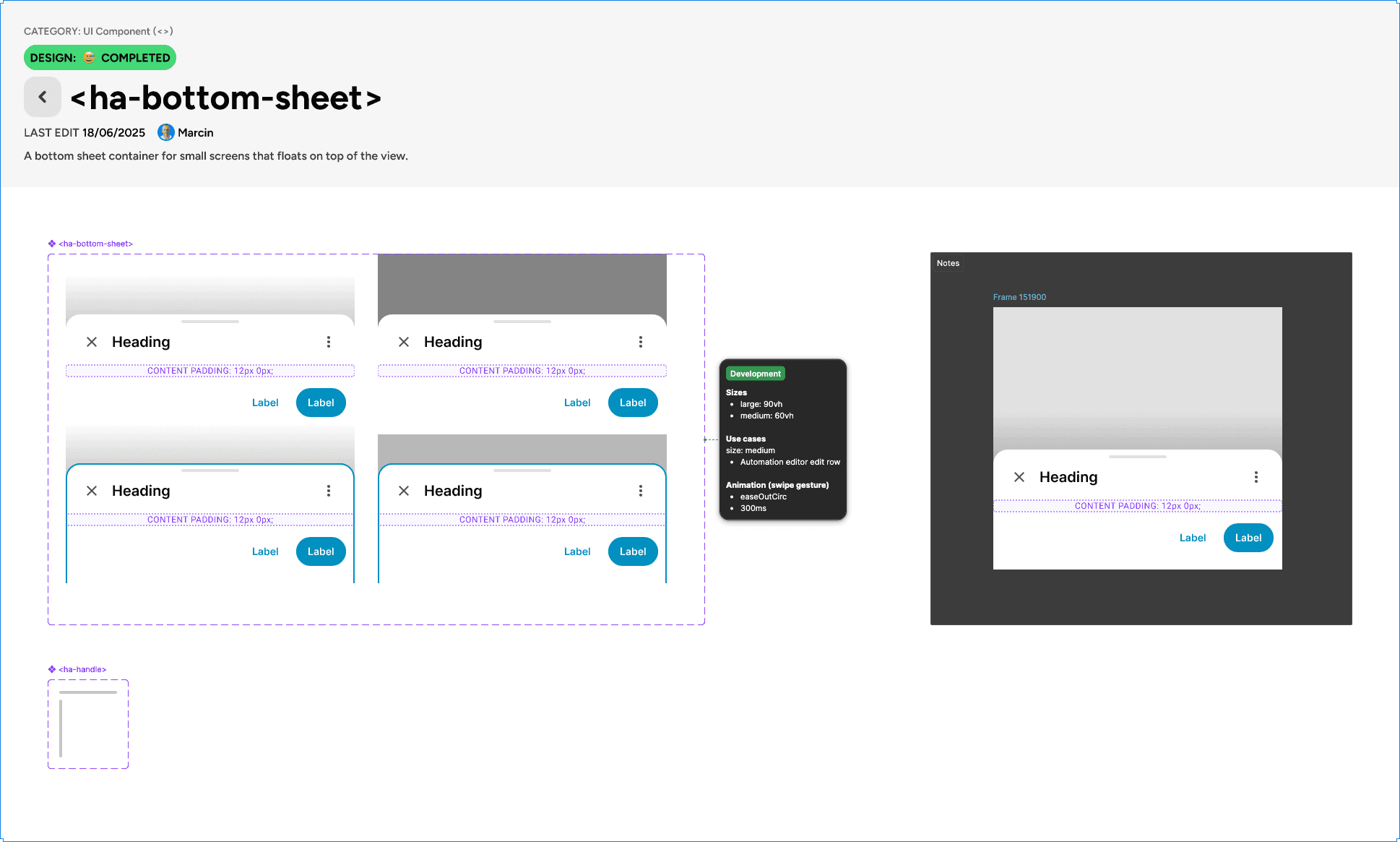

After a 3-month rollout of UI improvements, including a dynamic sidebar that split automations into view/config modes and mobile-optimized patterns, usability scores improved significantly. Users now build and manage automations more intuitively with less cognitive load.

Ideate

With problems prioritized, I sketched out flows, automation patterns, and component updates using Figma, Figma Make, and code experiments in agentic code editors. The ideas were aimed at power users and newcomers alike and had to fit our monthly release schedule. I narrowed them down with PMs and engineers based on impact and fit, and documented what we would tackle now, defer, or skip to stay focused.

Deliver

With concepts locked in, delivery meant shipping small, steady improvements to general usability, automations, and contextual info, while working closely with maintainers to avoid breaking setups or missing monthly releases. In open source, this wasn't just a handoff; I focused on clear documentation, quality checks, and guiding volunteer contributors to match design standards. This got real value to users faster while ensuring consistency.

DELIVER: TOKENS

Led and introduced a two‑layer token system (base + semantic, for light and dark themes) aligned with the Web Awesome library, enabling theming and reducing hard‑coded values

IMPACT

Significantly reduced one-off hard-coded values (DRY)

Made the work of developers simpler and reduced inconsistencies in the codebase

Laid groundwork for reusing tokens for other components

Introduced a systematized way of theming
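The two-layer idea can be sketched in a few lines of TypeScript. This is only an illustration under stated assumptions: the token names, hex values, and the `resolveToken` and `toCssVariables` helpers are hypothetical and are not taken from the Home Assistant codebase or from Web Awesome.

```typescript
type Theme = "light" | "dark";

// Base layer: raw values with no meaning attached (hypothetical names and values).
const baseTokens = {
  "neutral-05": "#0f1113",
  "neutral-95": "#f3f4f6",
  "primary-40": "#0069b4",
  "primary-70": "#5cb8ff",
} as const;

type BaseToken = keyof typeof baseTokens;

// Semantic layer: names describe purpose and point at one base token per theme.
const semanticTokens: Record<string, Record<Theme, BaseToken>> = {
  "color-surface": { light: "neutral-95", dark: "neutral-05" },
  "color-text": { light: "neutral-05", dark: "neutral-95" },
  "color-accent": { light: "primary-40", dark: "primary-70" },
};

// Resolve a semantic token to its raw value for the given theme.
function resolveToken(name: string, theme: Theme): string {
  const entry = semanticTokens[name];
  if (!entry) {
    throw new Error(`Unknown semantic token: ${name}`);
  }
  return baseTokens[entry[theme]];
}

// Emit one CSS custom property declaration per semantic token.
function toCssVariables(theme: Theme): string {
  return Object.keys(semanticTokens)
    .map((name) => `--${name}: ${resolveToken(name, theme)};`)
    .join("\n");
}
```

Components would then reference only semantic names such as `--color-surface`, so switching themes or adjusting a base value does not require touching component styles.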

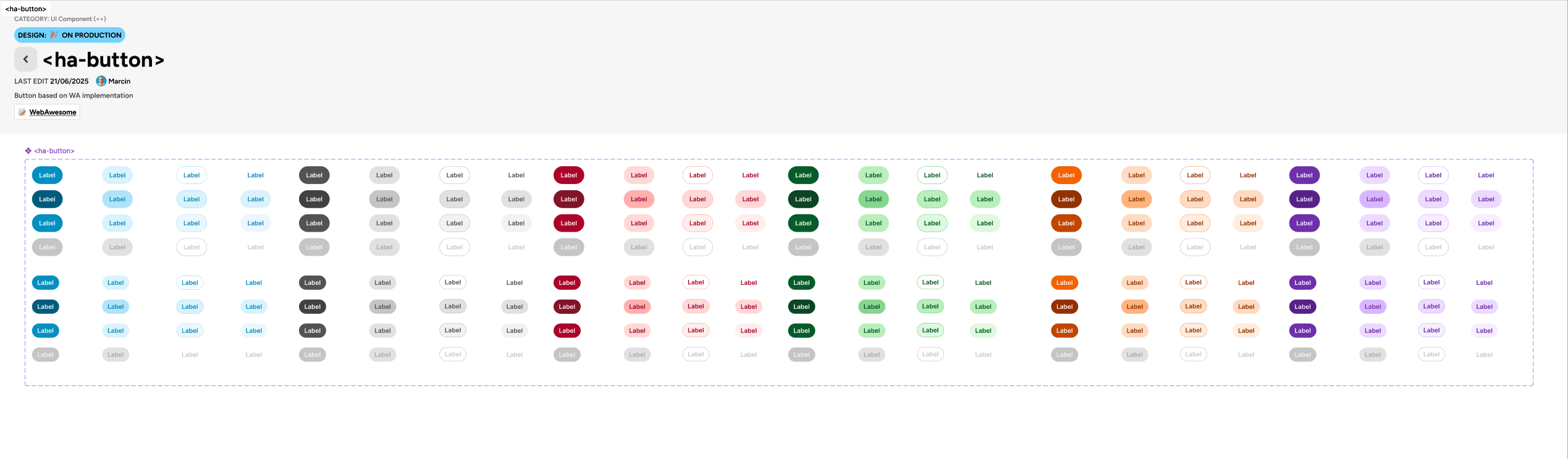

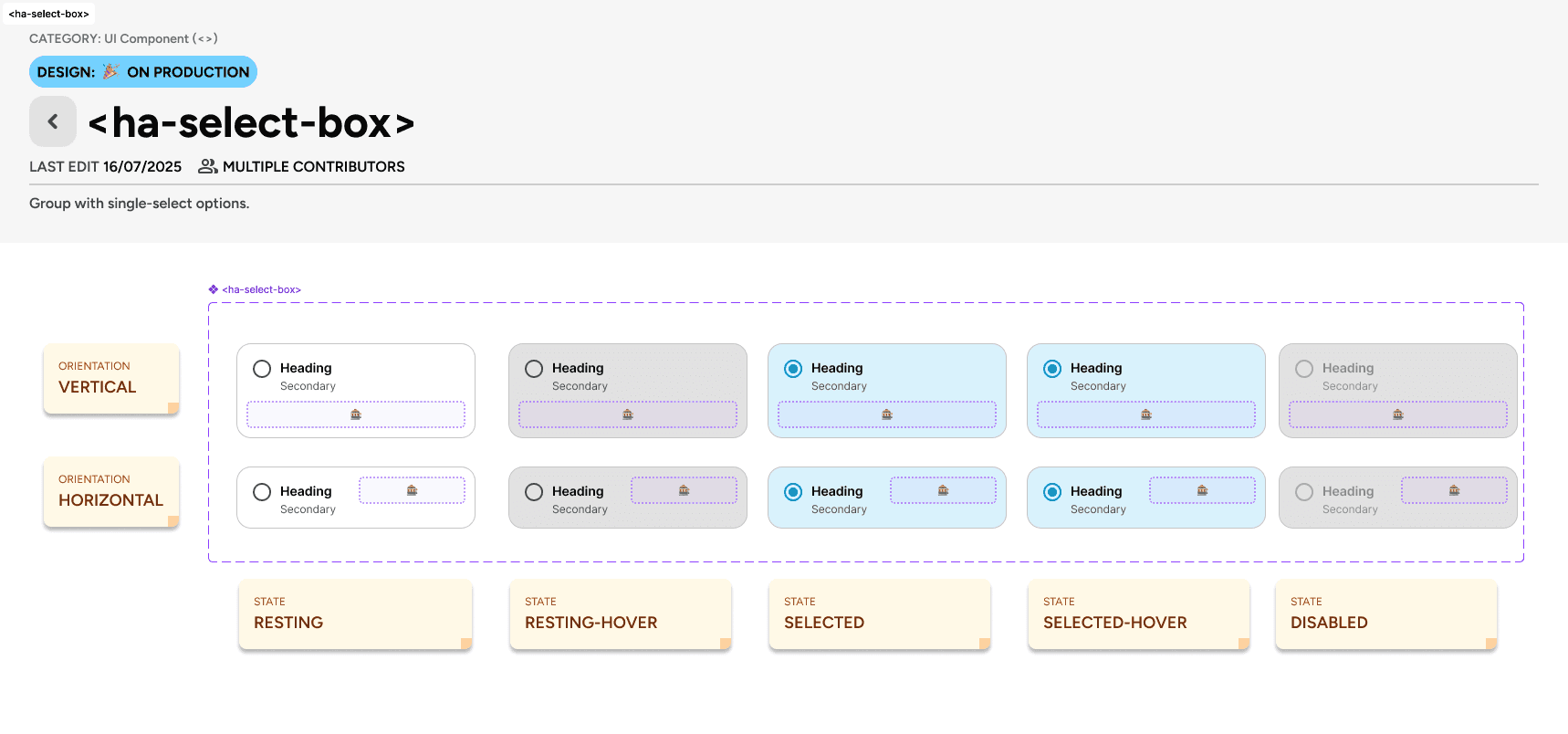

DELIVER: UI KIT

Created and implemented a new UI kit based on new tokens and components

IMPACT

New components improved the user experience on desktop and mobile and helped display information-dense screens (e.g., the automation editor)

Using the "slot" method in Figma components removed the need to detach components from the library, making the design files more future-proof and reducing maintenance for designers

DELIVER: CONTEXTUAL INFORMATION

Information architecture workstream

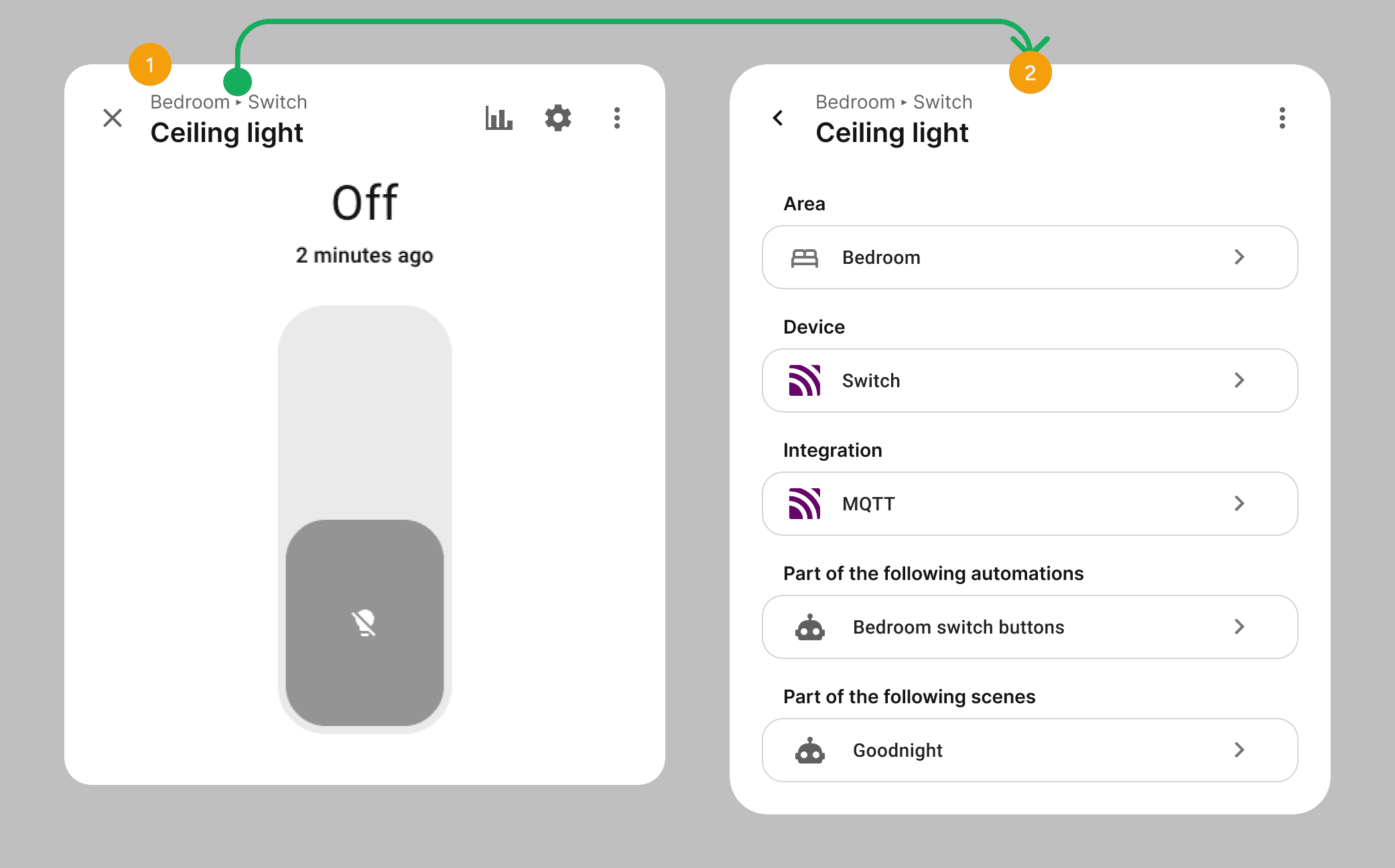

I designed and helped deliver contextual information across several key areas of the Home Assistant UI, providing the right details at the right moment to cut confusion.

DESIGN GOALS

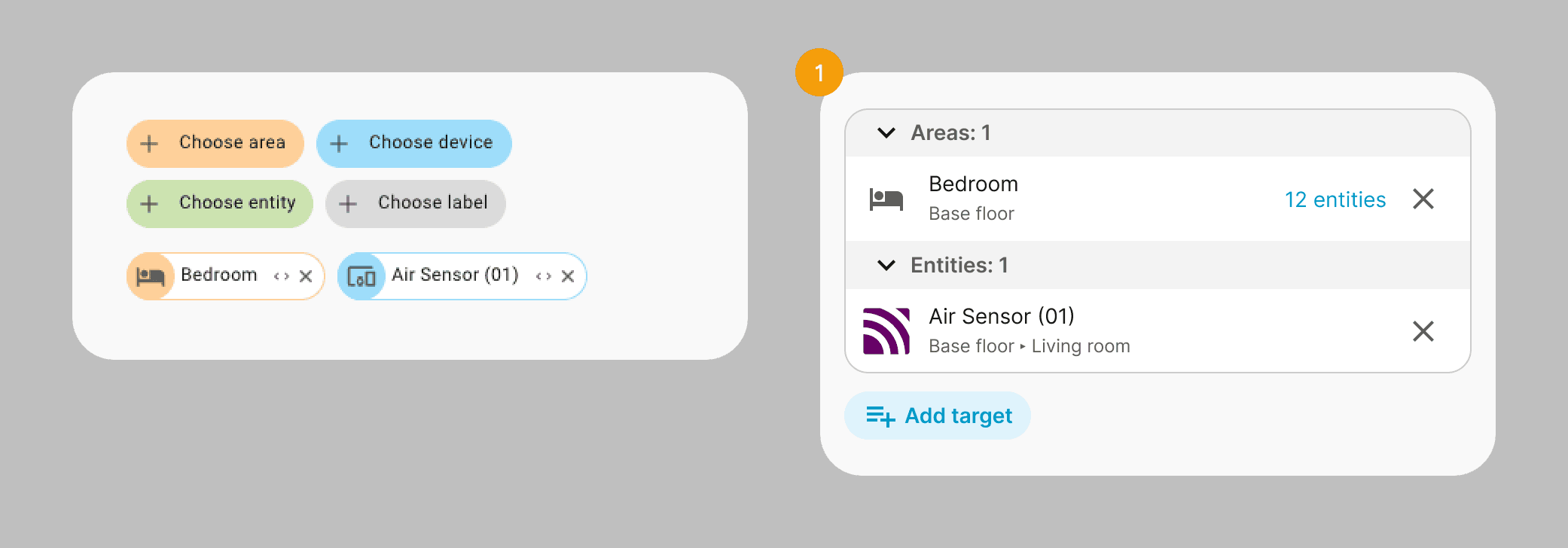

In the Home Assistant UI, users can select different types of so-called "targets" to get data from or point to. In versions preceding my work, this UI pattern had major shortcomings in accessibility, readability, and clarity of information.

DESIGN GOALS

DELIVER: AUTOMATION EDITOR

Automation editor workstream

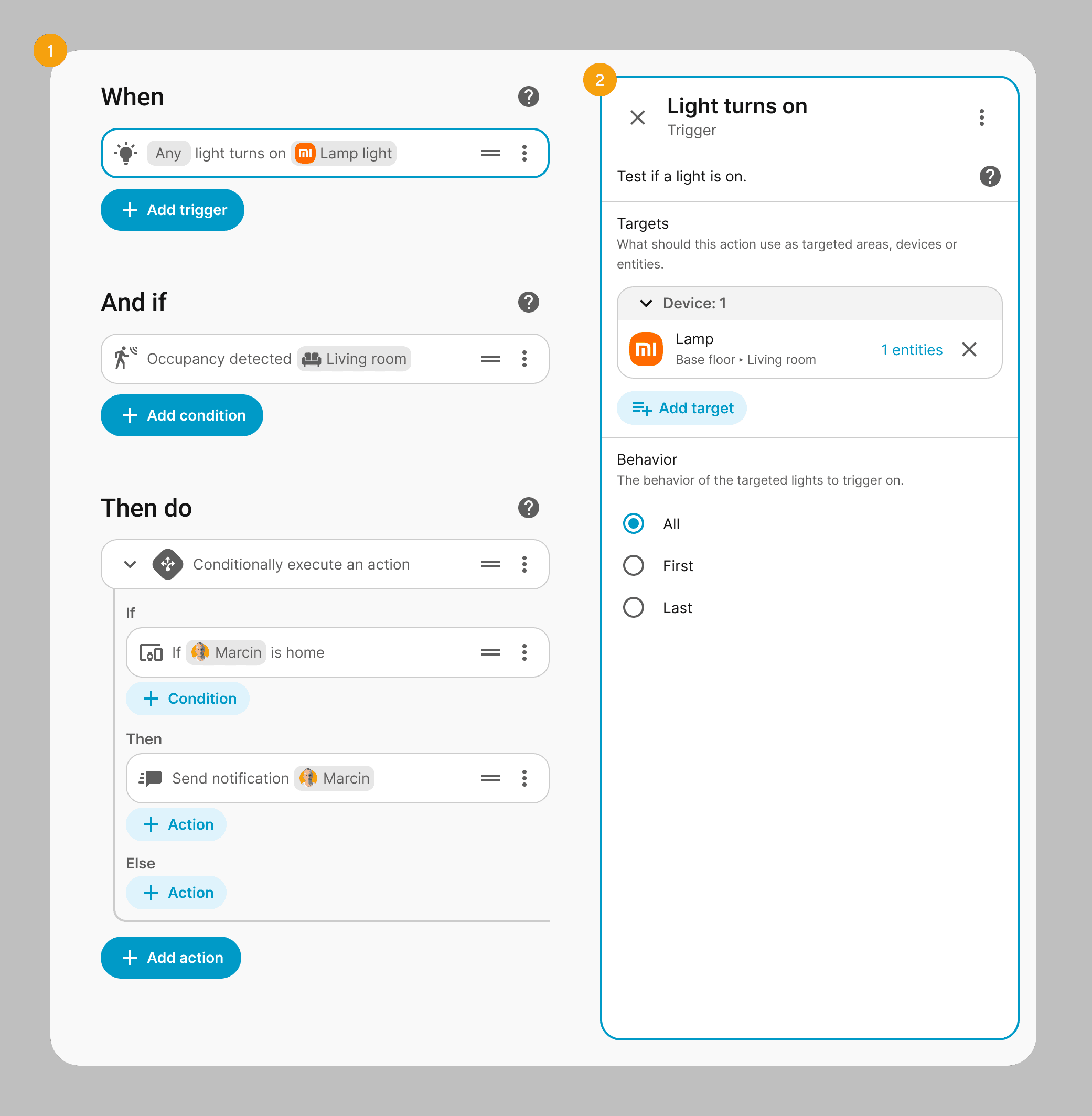

As noted in the Define phase, the automation editor redesign also overhauled its visual layout, starting with a simplified list view for triggers, conditions, and actions (1).

Each row's configuration was then moved to a dynamic sidebar (2) that appears on demand, cutting information overload and cognitive load. Mobile-specific patterns, such as a resizable bottom sheet, were also introduced to compensate for the limited screen space available on mobile.

DESIGN GOALS

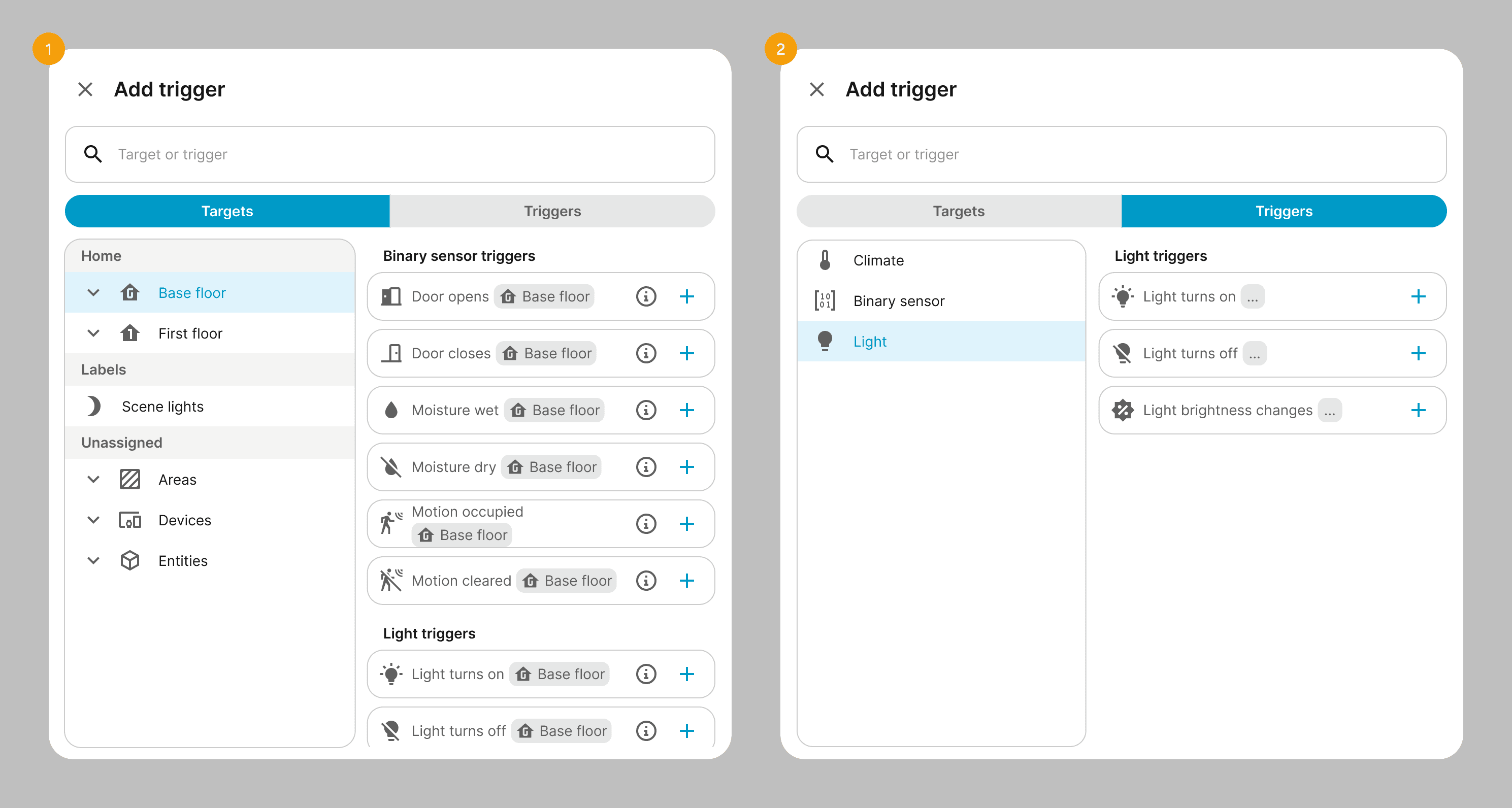

The second part of my work was devoted to creating a new type of trigger and condition based on user intent rather than on a technical property of an entity.

To enable that, the dialog for adding triggers and conditions was reworked from the ground up. Users can now browse a tree view of the floor-area-device-entity hierarchy and see the triggers or conditions available for the element they currently have selected.
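The browsing model described above can be sketched as a small tree walk in TypeScript. This is a simplified illustration, not the actual Home Assistant implementation: the `TargetNode` shape, the IDs, the trigger labels, and the rule that a selected node offers the triggers of everything beneath it are all assumptions made for the example.

```typescript
type NodeKind = "floor" | "area" | "device" | "entity";

// Hypothetical node shape for the floor-area-device-entity hierarchy.
interface TargetNode {
  id: string;
  kind: NodeKind;
  name: string;
  triggers: string[]; // intent-style labels, made up for this sketch
  children: TargetNode[];
}

// Depth-first search for the node the user selected in the tree view.
function findNode(root: TargetNode, id: string): TargetNode | undefined {
  if (root.id === id) return root;
  for (const child of root.children) {
    const match = findNode(child, id);
    if (match) return match;
  }
  return undefined;
}

// Collect the de-duplicated triggers of the selected node and all descendants.
function availableTriggers(node: TargetNode): string[] {
  const seen = new Set<string>();
  const visit = (n: TargetNode): void => {
    n.triggers.forEach((t) => seen.add(t));
    n.children.forEach((child) => visit(child));
  };
  visit(node);
  return Array.from(seen).sort();
}

// Example data: one floor, one area, two devices with one entity each.
const home: TargetNode = {
  id: "floor.ground", kind: "floor", name: "Ground floor", triggers: [],
  children: [{
    id: "area.living_room", kind: "area", name: "Living room", triggers: [],
    children: [
      {
        id: "device.ceiling_lamp", kind: "device", name: "Ceiling lamp", triggers: [],
        children: [{
          id: "light.ceiling", kind: "entity", name: "Ceiling light",
          triggers: ["Light turned on", "Light turned off"], children: [],
        }],
      },
      {
        id: "device.motion_sensor", kind: "device", name: "Motion sensor", triggers: [],
        children: [{
          id: "binary_sensor.motion", kind: "entity", name: "Motion",
          triggers: ["Motion detected"], children: [],
        }],
      },
    ],
  }],
};
```

Selecting the living room in this sketch would surface the triggers of both devices, while selecting only the motion sensor would narrow the list to its single trigger.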

DESIGN GOALS

Challenges & Solutions

Transitioning into a large, legacy open‑source ecosystem meant designing for a global community rather than around it. The main challenge was modernizing the experience without blocking contributors or breaking existing setups.

How I've handled it

QUALITY CONTROL AT SCALE

With hundreds of unique contributors, maintaining UI consistency was difficult.

I shifted from being a sole designer to a 'Design Reviewer,' actively testing Pull Requests (PRs) in VS Code and GitHub. I provided detailed design feedback to contributors, ensuring their code met our quality standards before merging.

How I've handled it

TECHNICAL DEBT

The platform relied on an aging Material Design 2 implementation with accumulating technical debt.

I led the systematic evolution of the UI kit, introducing semantic tokens and upgrading components to improve accessibility (a11y) and minimize breaking changes in theming.

How I've handled it

THE COMPLEXITY CHALLENGE

Home Assistant is powerful but historically intimidating for new users.

To solve this, I launched user surveys to identify pain points for specific product areas and used AI tools (like Cursor and Figma Make) to rapidly prototype simplified flows. This allowed us to validate easier experiences without sacrificing the advanced control power users expect.

Key outcomes and impact of the team

marcinbauer.com © 2026

Last updated: 20.11.2025